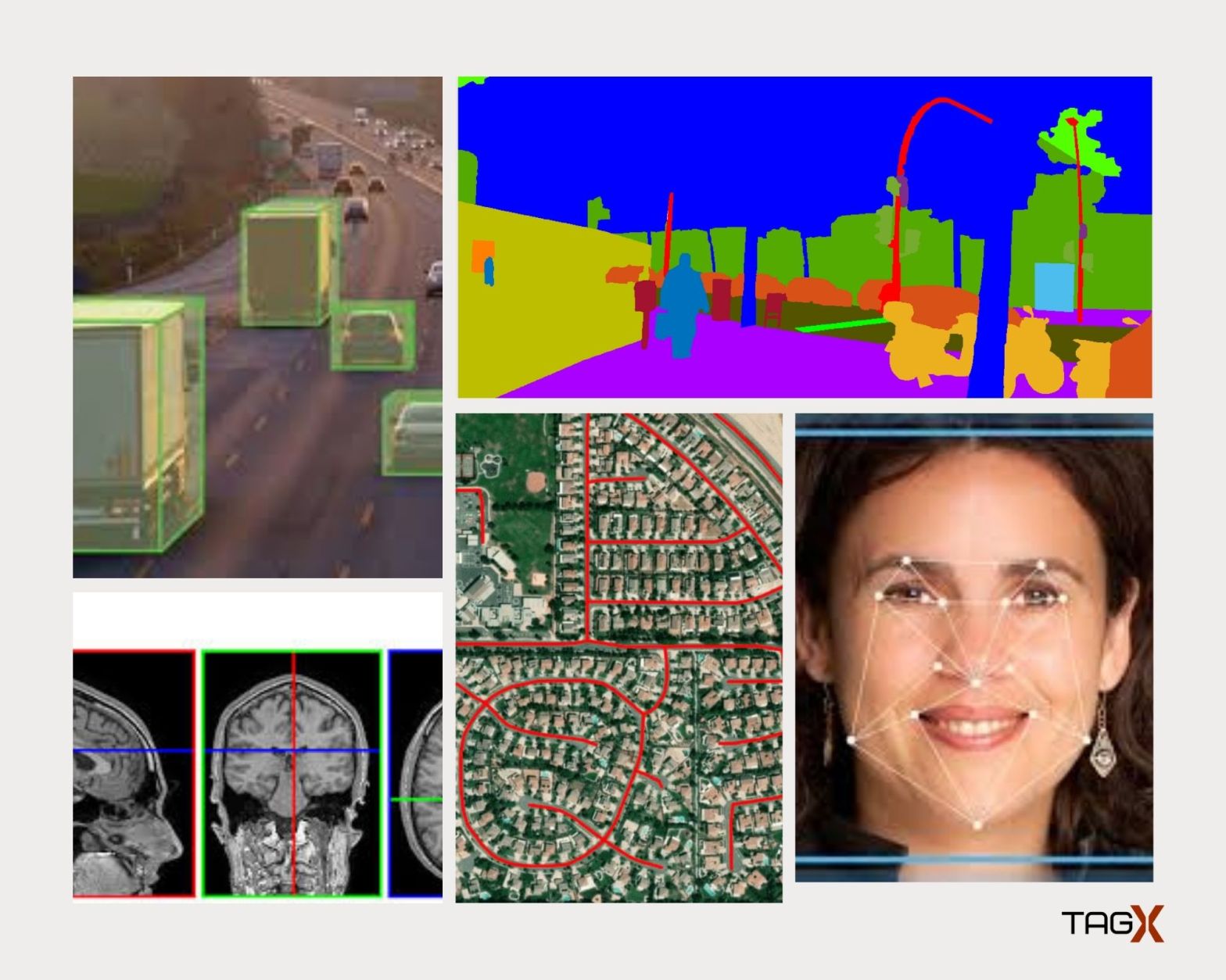

Computer vision algorithms are not magic. They need data to work, and they can only be as good as the data you feed them. Developing computer vision algorithms depends on large volumes of data, from which the learning process draws many entities, relationships, and clusters. To broaden and enrich the correlations the algorithm learns, it needs data from diverse sources, in diverse formats, about diverse business processes.

Collecting and preparing the dataset is one of the most crucial parts of creating an ML/AI project. The technology behind any machine learning project cannot work properly if the dataset is not well prepared and pre-processed. There are different sources from which to collect the right data, depending on the task. Below are four ways you can acquire data for your model.

1. Get Open Datasets

Public datasets come from organizations and businesses that are open enough to share them. They are easily accessible, typically online, and are created by individuals, businesses, governments, and other organizations. Some are free, and others require the purchase of a license to use the data. Open data is sometimes called public or open source, but it generally cannot be altered in its published form. It is available in various formats and platforms (e.g., CSV, JSON, BigQuery). Explore Kaggle, Google Dataset Search, and other resources to find what intrigues you.

Some open datasets are annotated, or pre-labeled, for specific use cases that may be different from yours. If the labeling does not meet your standards, it could negatively impact your model or require you to spend more resources validating the annotations than you would have spent procuring the right-fit dataset in the first place. While open datasets offer opportunities, the real value usually comes from internally collected golden data nuggets mined from the business decisions and activities of your own company.
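As a rough illustration of validating the annotations in an open dataset, the sketch below counts how often each label appears and flags labels that fall outside the set your project expects. The record layout (`image` and `label` keys), the file names, and the label names are hypothetical; a real dataset would first need to be parsed from its own CSV or JSON format.

```python
from collections import Counter

def audit_labels(annotations, expected_labels):
    """Summarize label usage and flag labels outside the expected set."""
    counts = Counter(a["label"] for a in annotations)
    # Labels present in the dataset that your project does not define.
    unexpected = sorted(set(counts) - set(expected_labels))
    # Labels your project needs that the dataset never uses.
    missing = sorted(set(expected_labels) - set(counts))
    return {"counts": dict(counts), "unexpected": unexpected, "missing": missing}

# Hypothetical annotations, as they might look after parsing a CSV or JSON file.
annotations = [
    {"image": "img_001.jpg", "label": "car"},
    {"image": "img_002.jpg", "label": "truck"},
    {"image": "img_003.jpg", "label": "car"},
    {"image": "img_004.jpg", "label": "automobile"},
]

report = audit_labels(annotations, expected_labels={"car", "truck", "bus"})
print(report)
```

A check like this only catches mismatched or missing label names. Judging whether each label is actually correct still requires reviewing samples by hand.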

2. Collect or Create Your Own Dataset

You can build your own dataset using your own resources or services you hire. You can collect data manually or with software tools, such as web-scraping tools. You can also gather data using devices, such as cameras or sensors, to take pictures and videos of the scenarios you wish to train your model on. You may use a third party for aspects of that process, such as building out IoT devices, drones, or satellites.

You can crowdsource some of these tasks to gather ground truth or to establish real-world conditions. If you know the tasks that machine learning should solve, you can tailor a data-gathering mechanism in advance. You need to allocate a pool of resources to understand the nature of the training and test data and to collect it manually from different sources. Usually, collecting data is the work of a data engineer, a specialist responsible for creating data infrastructures. But in the early stages, you can engage a software engineer who has some database experience.
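One small piece of a data-gathering mechanism that can be decided in advance is how collected files are divided into training and test data. The sketch below is one possible approach, not a prescribed one: it hashes each file name so that the same file always lands in the same split, even as new data arrives. The function name `assign_split`, the file-naming pattern, and the 20% test fraction are assumptions made for illustration.

```python
import hashlib

def assign_split(filename, test_fraction=0.2):
    """Deterministically assign a file to 'train' or 'test' from a hash of its name."""
    digest = hashlib.sha256(filename.encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "test" if bucket < test_fraction else "train"

# Hypothetical file names produced by a camera or scraping job.
filenames = [f"capture_{i:04d}.jpg" for i in range(1000)]
splits = {name: assign_split(name) for name in filenames}

test_count = sum(1 for split in splits.values() if split == "test")
print(f"train={len(filenames) - test_count}, test={test_count}")
```

Because the assignment depends only on the file name, rerunning the collection pipeline does not move existing files between splits, which helps keep test data out of training.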

3. Outsource to a Third-Party Vendor

Here, you work with an organization or vendor who does the data gathering for you. This may include manual data gathering by people or automated data collection, using data-scraping algorithms. This is a good choice when you need a lot of data but do not have an internal resource to do the work. It’s an especially helpful option when you want to leverage a vendor’s expertise across use cases to identify the best ways to collect the data.

Developing the capacity to carry out this work in-house presents a number of challenges for technology companies. Outsourcing to dedicated data collection services can help solve many of these problems. TagX provides a professional, managed data collection and annotation service that meets your demands for accuracy, flexibility, and affordability.

4. Generate Synthetic Data

Synthetic data generation focuses on visual simulations and recreations of real-world environments. The result is photorealistic, scalable data created with cutting-edge computer graphics and data generation algorithms for training. It can be made extremely variable, it can be designed to reduce bias, and because it is generated programmatically, it comes annotated with exact ground truth, eliminating the bottlenecks that come with manual data collection and annotation.

Since synthetic data is generated from scratch, there are basically no limitations to what can be created; it’s like drawing on a white canvas. Because it reduces the need to capture data from real-world events, a dataset can be generated and constructed much more quickly than one that depends on real-world events, so large volumes of data can be produced in a short timeframe. This is especially useful for events that rarely occur: if an event rarely happens in the wild, more data can be mocked up from a few genuine data samples. TagX is also expanding its expertise in synthetic data generation to provide you with large volumes of data tailored to your model requirements.
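To make the idea of mocking up more data from a few genuine samples concrete, here is a deliberately simplified sketch. It is not the photorealistic, graphics-based generation described above. It uses basic augmentation (random horizontal flips and pixel noise) on a tiny grayscale image stored as nested lists. The function name `make_variants`, its parameters, and the sample image are all hypothetical.

```python
import random

def make_variants(image, n_variants, noise=10, seed=0):
    """Create perturbed copies of a grayscale image (rows of 0-255 integers)."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    variants = []
    for _ in range(n_variants):
        rows = [row[:] for row in image]  # copy so the original stays untouched
        if rng.random() < 0.5:
            rows = [row[::-1] for row in rows]  # horizontal flip
        # Add bounded random noise to every pixel, clamped to the valid range.
        rows = [
            [min(255, max(0, px + rng.randint(-noise, noise))) for px in row]
            for row in rows
        ]
        variants.append(rows)
    return variants

# A tiny stand-in for a genuine sample of a rare event.
rare_sample = [
    [0, 50, 100],
    [150, 200, 250],
]

synthetic = make_variants(rare_sample, n_variants=5)
print(len(synthetic), "variants generated")
```

In practice, an image library or a rendering engine would replace the nested lists, but the principle is the same: each variant inherits its label from the genuine sample it was derived from, so no additional manual annotation is needed.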

Conclusion

There are many ways to get training datasets. At TagX, we can help you decide the best approach to procuring datasets for your AI applications.

Book a consultation today at http://www.tagxdata.com