A chatbot is a piece of software that has been programmed to conduct a conversation. We can think of chatbots as Natural Language Processing systems that can respond to human communication. This communication can be via different modes like text, voice, or a combination of the two.

A chatbot is AI-powered software that can communicate with people in a clear and intelligent manner. A chatbot simulates conversation through messaging applications, websites, mobile apps, or smartphone assistants like Google Assistant or Siri. Thus, a chatbot is one of the most direct communication interfaces between a human and a machine.

Importance of chatbots

Communication has changed dramatically, and many businesses no longer have enough staff to meet the volume of customer demands they receive. This is where chatbots enter the market. Because they can communicate intelligibly, they are able to deliver customer satisfaction that would otherwise not be possible at that scale. In this way, chatbots serve the dual benefits of customer engagement and operational efficiency.

The main purpose of a chatbot is to reduce the workload that businesses currently carry. A chatbot is available at all times, irrespective of local time and geographic location. A well-trained chatbot can also be less prone to errors on routine requests, so it can deliver consistent customer service around the clock.

What is Chatbot Training Data?

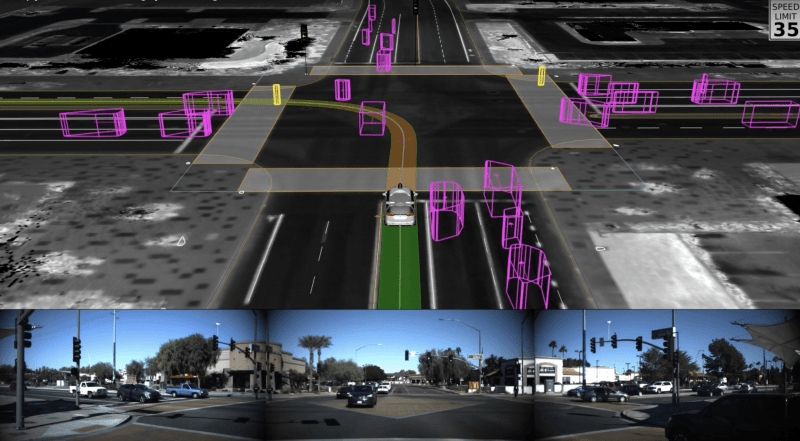

A chatbot needs data for two main reasons: to know what people are saying to it, and to know what to say back. An effective chatbot requires a massive amount of training data in order to quickly resolve user requests without human intervention. However, the main obstacle to the development of chatbots is obtaining realistic and task-oriented dialog data to train these machine learning-based systems.

Fundamentally, a chatbot turns raw data into a conversation. Consider a simple customer service bot. The chatbot needs a rough idea of the type of questions people are going to ask it, and then it needs to know what the answers to those questions should be. It takes data from previous questions, perhaps from email chains or live-chat transcripts, and from previous correct answers, maybe from website FAQs or email replies. All of this data, in this case, is training data.
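
The idea of pairing previous questions with previous correct answers can be illustrated with a minimal sketch. It assumes a tiny, made-up set of question and answer pairs and uses simple word overlap (Jaccard similarity) to pick a reply. The names `TRAINING_PAIRS`, `tokenize`, `jaccard`, and `answer`, as well as the 0.2 threshold, are illustrative assumptions; a production chatbot would normally rely on a trained model rather than this kind of lookup.

```python
import re

# Hypothetical training data: past customer questions paired with approved answers.
TRAINING_PAIRS = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the sign-in page."),
    ("What are your opening hours?", "We are open 9am to 5pm, Monday to Friday."),
    ("How can I track my order?", "Open 'My orders' and select the order to see its status."),
]


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity between two token sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def answer(user_message, pairs=TRAINING_PAIRS, threshold=0.2):
    """Return the answer whose stored question best overlaps the message.

    Returns None when nothing scores at least `threshold`, which is the point
    where a real deployment might hand over to a human agent.
    """
    tokens = tokenize(user_message)
    best_score, best_answer = 0.0, None
    for question, reply in pairs:
        score = jaccard(tokens, tokenize(question))
        if score > best_score:
            best_score, best_answer = score, reply
    if best_score < threshold:
        return None
    return best_answer


print(answer("I forgot my password, how do I reset it?"))
print(answer("Do you sell gift cards?"))
```

The second message has almost no overlap with any stored question, so the sketch returns `None` instead of guessing. This is the gap that more training data is meant to close.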

Open source data

The best chatbots need a massive amount of training data to be useful. Just think about the number of conversations you have every day and how each of those differs in context. In an ideal world, a chatbot would need to account for all those conversational variations. Even if you have a lot of your own data, there are open source datasets that are free to use and can extend your knowledge base. Some examples of open source training data include:

- The WikiQA Corpus – a publicly available set of question and sentence pairs, built from Bing query logs and linked to Wikipedia pages

- Yahoo Language Data – curated question and answer datasets from Yahoo Answers

- Ubuntu Dialogue Corpus – around 1 million two-person conversations extracted from chat logs used to receive technical support

- Twitter Support – over 3 million tweets and replies from the biggest brands on Twitter

- CoNLL 2003 dataset – for training named entity recognition models

There are hundreds of datasets like these that can be incorporated into your training data to improve it.
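
Many public question and answer datasets are distributed as delimited text files, so adding them to your own data often starts with a small loader. The sketch below assumes a tab-separated layout with `Question`, `Sentence`, and `Label` columns and uses a made-up inline sample. The column names and rows are assumptions for illustration only, so check the header of the file you actually download.

```python
import csv
import io

# Tiny made-up sample in a tab-separated question/sentence/label layout.
# The column names are assumptions; real datasets may use different headers.
SAMPLE_TSV = (
    "Question\tSentence\tLabel\n"
    "what is a chatbot\tA chatbot is software that conducts a conversation.\t1\n"
    "what is a chatbot\tThis sentence does not answer the question.\t0\n"
    "when is a chatbot available\tA chatbot is available all the time.\t1\n"
)


def load_positive_pairs(handle):
    """Return (question, sentence) pairs whose label marks a correct answer."""
    reader = csv.DictReader(handle, delimiter="\t")
    return [
        (row["Question"], row["Sentence"])
        for row in reader
        if row["Label"] == "1"
    ]


pairs = load_positive_pairs(io.StringIO(SAMPLE_TSV))
for question, sentence in pairs:
    print(question, "->", sentence)
```

To read a real file, replace the `io.StringIO` object with `open(path, newline="", encoding="utf-8")`.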

Collecting your own data

Unique data is a valuable asset for a chatbot. Whilst open source training data is useful as a starting point, you need to ensure your chatbot learns quickly. One method is to launch your own chatbot and collect data from its conversations. The difficulty is that enough data needs to be collected from real chatbot usage to create an effective chatbot, yet the chatbot has to be effective in the first place before people actually start to use it.

It’s not only chat data that can be loaded into the bot. Chatbots need a lot of specific training data to learn how to respond effectively to different human interactions. So, to create an effective chatbot, you first need to collect and annotate information, which can come from your company’s FAQ web pages, customer service tickets and chat scripts, call logs, help email accounts, and other written sources. You can also draw directly on the personal knowledge of your sales representatives. This data can give you an edge over open source training data alone by providing business context.
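
As a rough sketch of the collection step, the snippet below merges utterances from several sources into one corpus, drops blank lines and duplicates, and records where each utterance came from. The source names and example utterances are hypothetical, and `normalize` and `merge_sources` are illustrative helpers rather than part of any library.

```python
import re


def normalize(text):
    """Lowercase, trim, and collapse whitespace so near-identical lines compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def merge_sources(sources):
    """Merge utterances from several sources, dropping blanks and duplicates.

    `sources` maps a source name to a list of raw utterances. The result keeps
    the first occurrence of each utterance together with its source name.
    """
    seen = set()
    merged = []
    for source_name, utterances in sources.items():
        for raw in utterances:
            key = normalize(raw)
            if not key or key in seen:
                continue
            seen.add(key)
            merged.append({"text": raw.strip(), "source": source_name})
    return merged


# Hypothetical raw data gathered from three internal sources.
raw_sources = {
    "faq": ["How do I reset my password?", "Where is my invoice?"],
    "support_tickets": ["how do I reset my   password?", "My invoice is missing  "],
    "chat_logs": ["", "Where is my invoice?"],
}

corpus = merge_sources(raw_sources)
for item in corpus:
    print(item["source"], "|", item["text"])
```

Keeping the source name alongside each utterance makes it easier to trace an odd training example back to where it was collected.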

Labeling Data

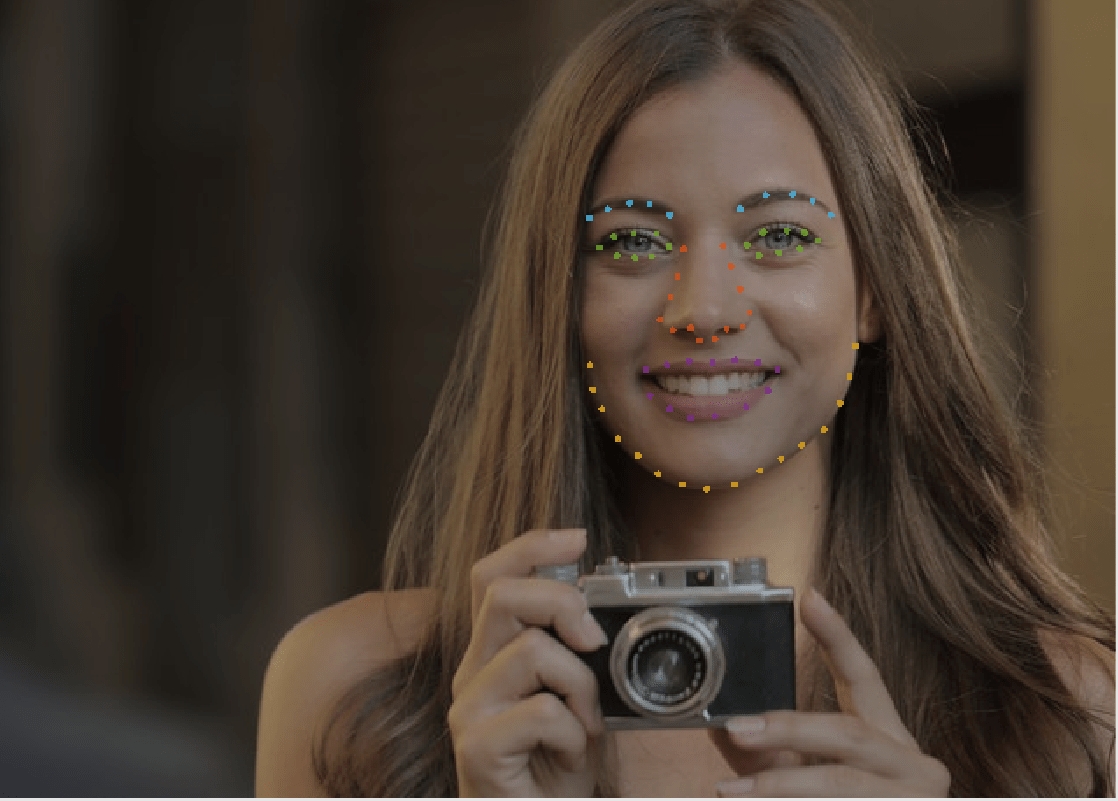

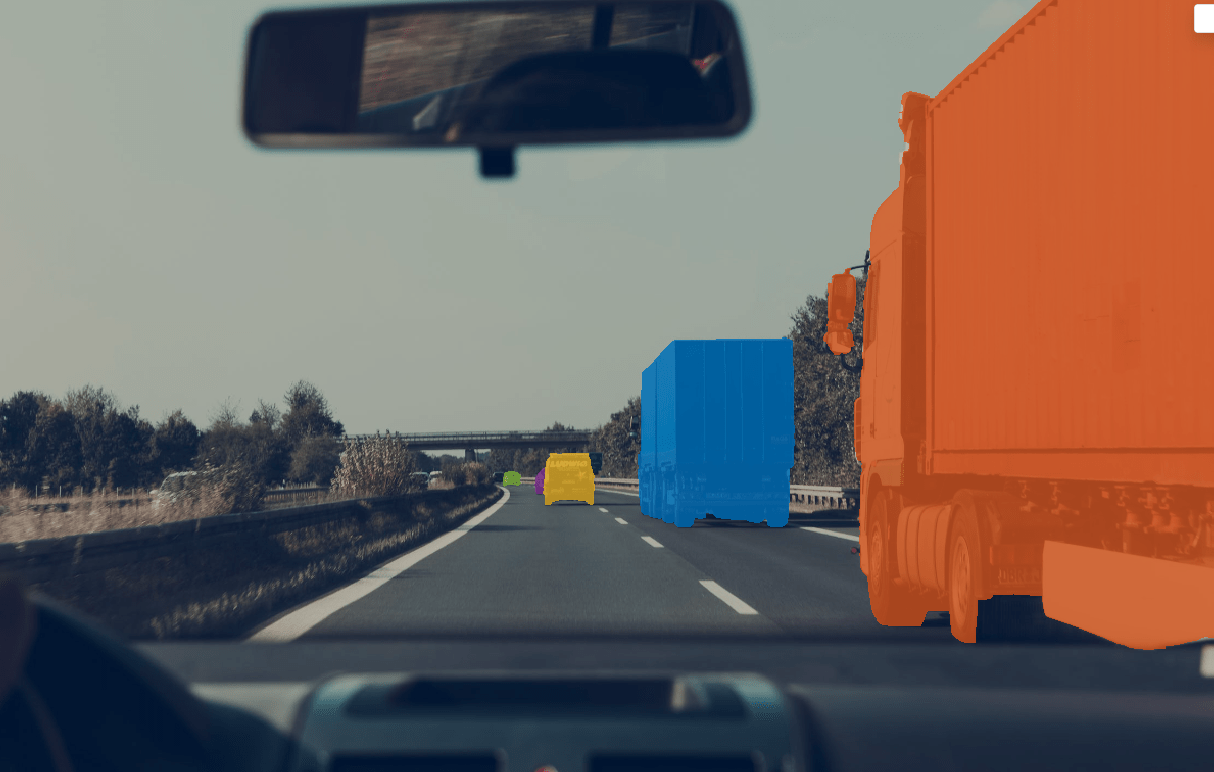

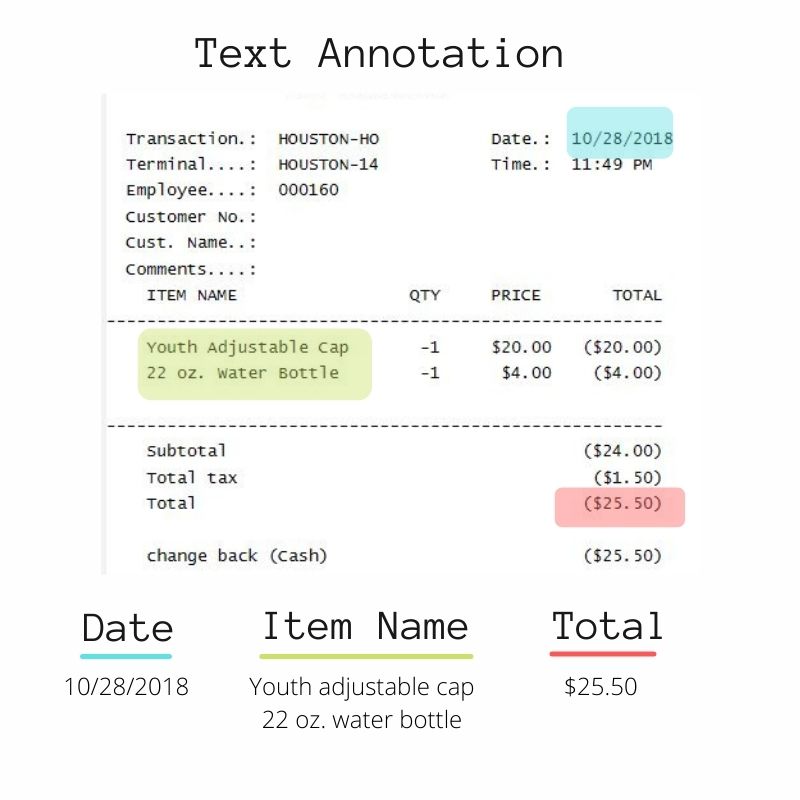

In most cases, human intervention is required to create labels for chatbot user intents. Data annotation gives chatbots the ability to respond to a question accurately, whether it is spoken or typed.

For example, an annotator would label “Hi”, “Hello”, “Hey”, “Howdy”, “Hallo”, and “Good morning” as Greetings so that the chatbot can deliver an appropriate response to any of them and avoid gaps in the data.

Labeling can be a full-time job, as new words and phrases need to be added to categories once they are picked up in chatbot conversations. If somebody used “Good afternoon”, it would need to be manually added to the Greetings label for the chatbot to recognize it as a greeting in the future. Beyond greetings, chatbots need intent classification, entity extraction, relationship extraction, syntactic analysis, sentiment analysis, and sometimes even translation.
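
The greetings example can be sketched as a small label table with an exact-match lookup. This is a deliberately simple illustration: `INTENT_LABELS`, `classify`, and `add_example` are hypothetical names, and a real system would typically train a classifier on the labeled phrases instead of matching them literally.

```python
# Labeled phrases per intent, mirroring the Greetings example in the text.
INTENT_LABELS = {
    "greeting": {"hi", "hello", "hey", "howdy", "hallo", "good morning"},
}


def normalize(utterance):
    """Lowercase the utterance and strip surrounding spaces and trailing punctuation."""
    return utterance.strip().lower().rstrip("!.?,")


def classify(utterance, labels=INTENT_LABELS):
    """Return the intent whose labeled phrases contain the utterance, else None."""
    text = normalize(utterance)
    for intent, phrases in labels.items():
        if text in phrases:
            return intent
    return None


def add_example(intent, phrase, labels=INTENT_LABELS):
    """Manually add a new phrase to an intent, as a human annotator would."""
    labels.setdefault(intent, set()).add(normalize(phrase))


print(classify("Hello!"))          # greeting
print(classify("Good afternoon"))  # None: not labeled yet
add_example("greeting", "Good afternoon")
print(classify("Good afternoon"))  # greeting
```

Until an annotator adds “Good afternoon”, the lookup cannot recognize it, which is why labeling is ongoing work rather than a one-off task.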

TagX for Chatbot Training

To make a chatbot smarter and more helpful, it is important to feed the AI algorithm with accurate, high-quality training data sets. TagX has significant experience in gathering, classifying, and processing chatbot training data sets of different types to make such automated applications more effective. We aim to enable the best virtual customer service within just a few seconds of interaction. We provide relevant, high-quality data sets to train your chatbots to solve customer queries and take appropriate actions as and when required.