Space exploration has long held the interest of scientists and governments around the world, because it may hold clues to humanity’s origins and to many other marvels of the universe.

Given recent breakthroughs in machine learning and artificial intelligence, imagine how much easier it would be for scientists and explorers to reach their goals, and how our lives might change, if we combined two enormous fields: AI and space exploration.

In space exploration, AI has shown enormous promise in areas such as global navigation, Earth observation, and two-way communications. Machine learning algorithms are already used for spacecraft monitoring, autonomous navigation, control systems, and detecting objects in a spacecraft’s path.

Artificial Intelligence for Space Applications

Artificial intelligence (AI) is leading the way in bringing us closer to the stars, and it also brings benefits to everyday life. Space data is useful for a variety of purposes, including transportation and navigation. AI-enabled robots help us collect and process this data so that it can be put to use where it is needed.

Earth Observation

Satellite imaging combined with artificial intelligence can be used to monitor a variety of environments, from urban areas to hazardous sites. This can improve urban planning and help identify the most suitable development sites. It can also help us find new routes, allowing us to travel more quickly and efficiently.

Satellites can also monitor regions of particular concern, such as areas affected by deforestation. The information gathered can help researchers monitor and care for these regions. Satellites can also be used to observe dangerous locations, such as nuclear power plants, without the need for people to enter them.
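To give a sense of how vegetation change can be measured from satellite imagery, here is a minimal sketch based on the Normalized Difference Vegetation Index (NDVI), a widely used measure computed from the near-infrared and red bands. The article does not name a specific method, so the function names, the input layout, and the 0.4 threshold are assumptions chosen for illustration only.

```python
def ndvi(nir, red):
    """NDVI for one pixel: (NIR - Red) / (NIR + Red), ranging from -1 to 1."""
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total


def vegetation_loss_fraction(before, after, threshold=0.4):
    """Fraction of pixels that were vegetated before but not after.

    before, after: lists of (nir, red) reflectance pairs for the same pixels.
    threshold: assumed NDVI cut-off for "vegetated" (illustrative value).
    """
    if len(before) != len(after):
        raise ValueError("before and after must cover the same pixels")
    vegetated = 0
    lost = 0
    for (nir_b, red_b), (nir_a, red_a) in zip(before, after):
        if ndvi(nir_b, red_b) >= threshold:
            vegetated += 1
            if ndvi(nir_a, red_a) < threshold:
                lost += 1
    return lost / vegetated if vegetated else 0.0


# Hypothetical reflectance values for three pixels, observed twice.
before = [(0.5, 0.1), (0.6, 0.1), (0.2, 0.2)]
after = [(0.5, 0.1), (0.2, 0.2), (0.2, 0.2)]
print(vegetation_loss_fraction(before, after))
```

A production pipeline would work on whole raster images and would likely use a trained model rather than a fixed threshold; the sketch only shows the basic comparison between two observation dates.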

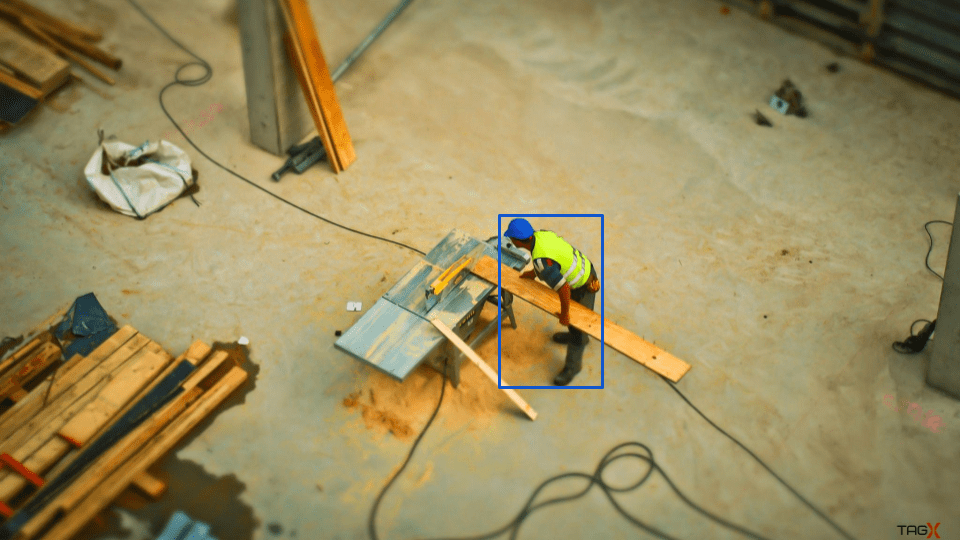

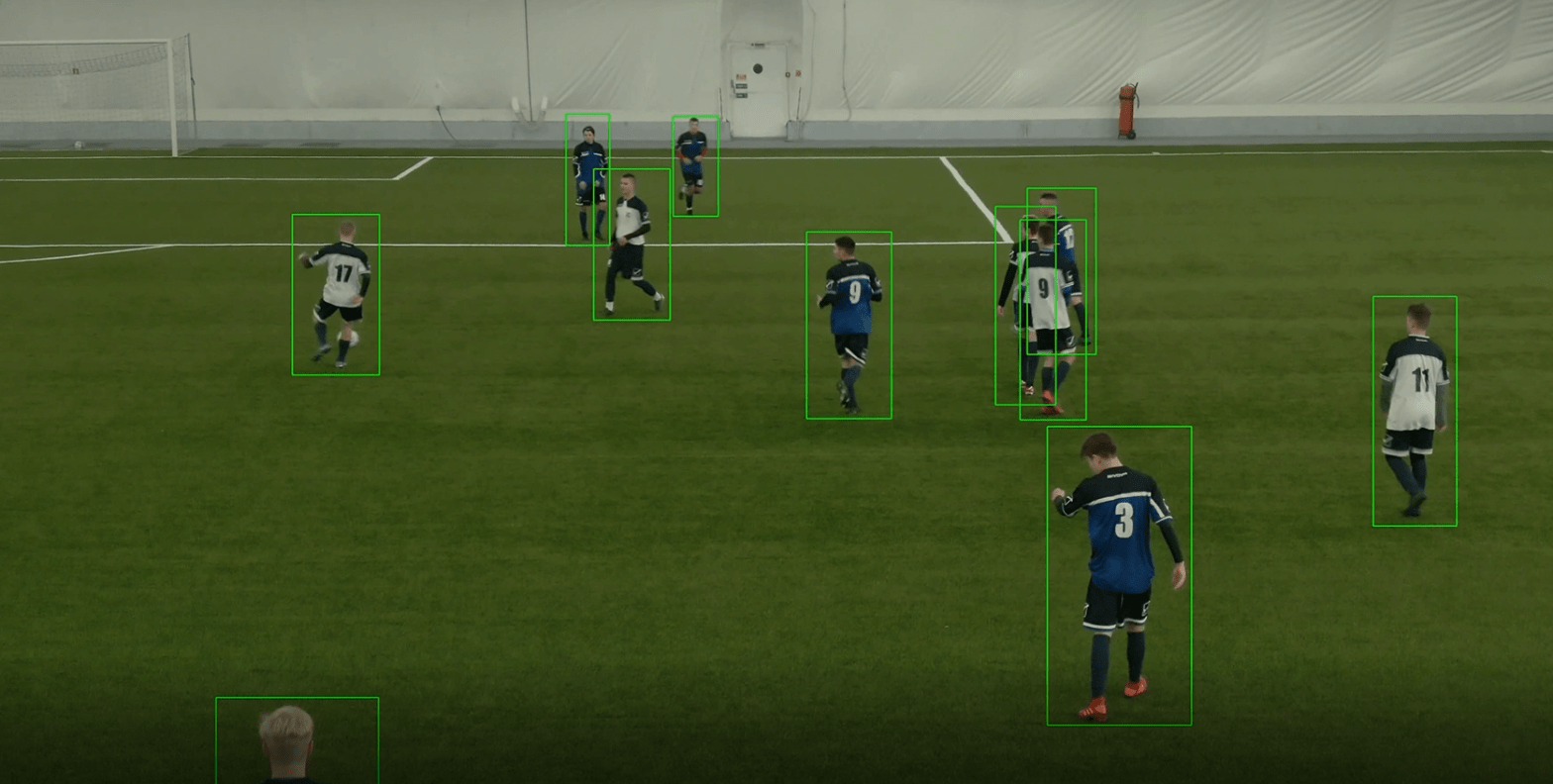

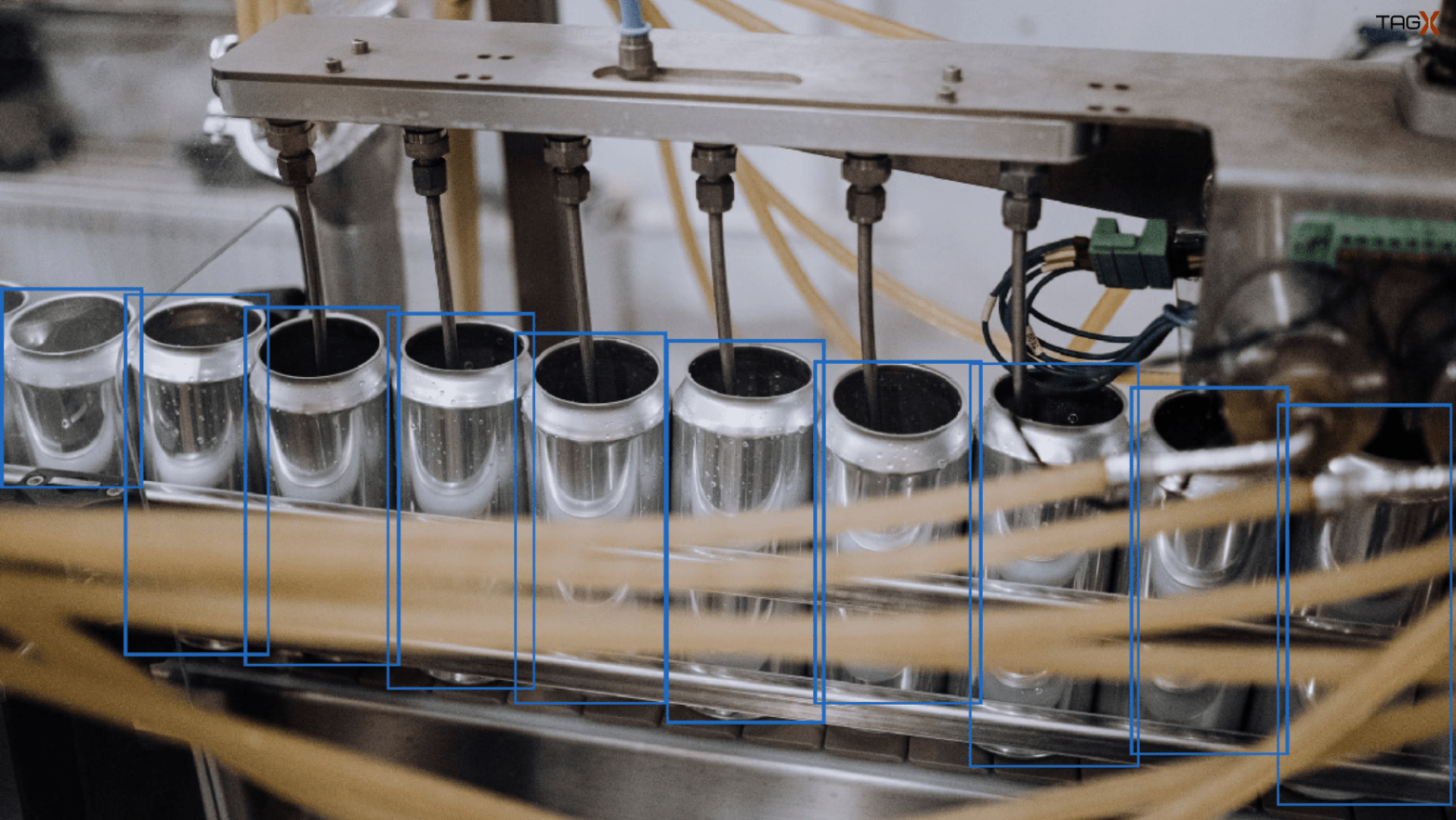

Data collected by space technology is structured with data annotation techniques, processed by AI systems, and fed back to the industries that require it, allowing them to act on it.

Satellite Monitoring

Satellites and spacecraft, like any other complex system, require extensive monitoring. The list of potential issues is long, ranging from minor faults to collisions with other orbiting objects, and from the most foreseeable to the most unlikely. To keep track of the state of artificial satellites, scientists use AI systems that continuously analyze the readings of various sensors. Such systems can not only inform ground control of problems but, in some cases, also resolve them on their own.
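The continuous sensor analysis described above can be illustrated with a very simple anomaly detector. This is a sketch under stated assumptions, not how any particular satellite operator does it: it flags a telemetry reading when it deviates from the mean of the last few readings by more than a chosen number of standard deviations. The function name, window size, and threshold are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def find_anomalies(readings, window=5, z_threshold=3.0):
    """Return the indices of readings that deviate strongly from recent history.

    A reading is flagged when its distance from the rolling mean exceeds
    z_threshold rolling standard deviations. If the recent readings are all
    identical (standard deviation of zero), nothing is flagged.
    """
    recent = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                anomalies.append(index)
                continue  # keep the outlier out of the baseline
        recent.append(value)
    return anomalies


# Hypothetical temperature telemetry in degrees Celsius with one spike.
temperatures = [20.0, 20.1, 19.9, 20.2, 20.0, 20.1, 35.0, 20.0, 19.9]
print(find_anomalies(temperatures))
```

Real monitoring systems typically combine many sensors and learned models, but the structure is similar: maintain a baseline of normal behavior and report departures from it.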

SpaceX, for example, has fitted its satellites with a system of sensors and actuators that can track a satellite’s position and adjust it to avoid colliding with other objects.

Spacecraft, probes, and rovers all use artificial intelligence to navigate. Experts note that the technology used to steer these vehicles is quite similar to the systems that guide autonomous cars. It also integrates data from sensors and maps to track conditions in environments beyond Earth.
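As a rough illustration of the reasoning behind collision avoidance, the sketch below estimates when two objects come closest and how far apart they will be at that moment. It assumes straight-line relative motion, which is only a reasonable approximation over short time spans; operational systems propagate full orbits and account for position uncertainty. The function names and the 2 km safety threshold are hypothetical and do not describe SpaceX's system.

```python
import math


def closest_approach(rel_pos_km, rel_vel_km_s):
    """Time (s) and distance (km) of closest approach under linear motion.

    rel_pos_km: position of the other object relative to the satellite.
    rel_vel_km_s: velocity of the other object relative to the satellite.
    Only future times are considered, so the returned time is never negative.
    """
    speed_sq = sum(v * v for v in rel_vel_km_s)
    if speed_sq == 0:
        t = 0.0  # no relative motion; the distance never changes
    else:
        dot = sum(p * v for p, v in zip(rel_pos_km, rel_vel_km_s))
        t = max(0.0, -dot / speed_sq)
    miss = math.sqrt(sum((p + v * t) ** 2 for p, v in zip(rel_pos_km, rel_vel_km_s)))
    return t, miss


def needs_maneuver(rel_pos_km, rel_vel_km_s, safe_distance_km=2.0):
    """True when the predicted miss distance is below the assumed safe distance."""
    _, miss = closest_approach(rel_pos_km, rel_vel_km_s)
    return miss < safe_distance_km


# Hypothetical encounter: object 10 km ahead, offset 1 km, closing at 1 km/s.
print(closest_approach((10.0, 1.0, 0.0), (-1.0, 0.0, 0.0)))
```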

Communication

In addition to keeping spacecraft operating, maintaining communication between Earth and space can be difficult. Depending on the state of the atmosphere, interference from other signals, and the surrounding environment, a satellite may have to overcome numerous communication obstacles. Artificial intelligence is now being used to help control satellite communication and overcome these transmission issues.

These AI-enabled systems can work out how much power and which frequencies are required to send data back to Earth or to other satellites. A satellite with AI on board does this continuously, so that its signals keep getting through as it travels through space.
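To show what "how much power and which frequencies" means in practice, here is a minimal link-budget sketch. It is not an AI method and is not taken from any specific satellite system: it uses the textbook free-space path loss formula and simply picks, from a set of candidate frequencies, the one that needs the least transmit power. The function names, the per-channel extra loss values, and the 3 dB margin are assumptions for illustration. An adaptive system would estimate those losses from live measurements instead of receiving them as fixed inputs.

```python
import math


def free_space_path_loss_db(distance_km, freq_ghz):
    """Free-space path loss in dB for a distance in km and a frequency in GHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_ghz) + 92.45


def required_tx_power_dbm(distance_km, freq_ghz, rx_sensitivity_dbm,
                          antenna_gains_db=0.0, extra_loss_db=0.0, margin_db=3.0):
    """Transmit power needed for the signal to arrive above receiver sensitivity.

    extra_loss_db stands in for atmospheric fade or interference (assumed input).
    """
    path_loss = free_space_path_loss_db(distance_km, freq_ghz) + extra_loss_db
    return rx_sensitivity_dbm + path_loss - antenna_gains_db + margin_db


def pick_channel(distance_km, channels, rx_sensitivity_dbm, antenna_gains_db=0.0):
    """Choose the frequency that needs the least transmit power.

    channels: dict mapping frequency in GHz to its current extra loss in dB.
    Returns (freq_ghz, required_power_dbm).
    """
    options = [
        (required_tx_power_dbm(distance_km, freq, rx_sensitivity_dbm,
                               antenna_gains_db, extra_loss), freq)
        for freq, extra_loss in channels.items()
    ]
    power_dbm, freq_ghz = min(options)
    return freq_ghz, power_dbm


# Hypothetical case: the 2 GHz channel suffers heavy interference right now.
print(pick_channel(1000, {2.0: 20.0, 8.0: 1.0}, rx_sensitivity_dbm=-100.0,
                   antenna_gains_db=60.0))
```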

AI-Based Assistants and Robots

Scientists are working on AI-based assistants to support astronauts on missions to the Moon, Mars, and beyond. These assistants are designed to anticipate and understand the crew’s needs, recognize astronauts’ emotions and mental state, and take appropriate action in an emergency. So, how do they accomplish this? Sentiment analysis is part of the answer.

Sentiment analysis is a branch of Natural Language Processing (NLP) that aims to recognize and extract opinions and emotions from text, traditionally from sources such as blogs, reviews, social media, forums, and news. Robots, on the other hand, can be more useful for physical assistance, such as helping with spacecraft piloting and docking and handling harsh conditions that are dangerous to people. Much of this may still seem speculative, but astronauts stand to benefit greatly from it.
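To make the idea of sentiment analysis concrete, here is a toy lexicon-based scorer. Real assistants would rely on trained language models rather than a hand-written word list; the word sets and the function name below are hypothetical and exist only to show the basic principle of mapping text to a positive or negative score.

```python
import re

# Tiny illustrative word lists (assumptions, not a real sentiment lexicon).
POSITIVE = {"good", "great", "calm", "fine", "happy", "rested"}
NEGATIVE = {"tired", "stressed", "worried", "bad", "anxious", "exhausted"}
NEGATIONS = {"not", "no", "never"}


def sentiment_score(text):
    """Return a signed score: above zero is positive, below zero is negative.

    A negation word flips the polarity of the word immediately after it.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    negate = False
    for token in tokens:
        if token in NEGATIONS:
            negate = True
            continue
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        if polarity:
            score += -polarity if negate else polarity
        negate = False
    return score


# Hypothetical crew log entries.
for entry in ["I feel great and rested", "I am tired and stressed", "I am not happy"]:
    print(entry, "->", sentiment_score(entry))
```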

Data Annotation for Space AI

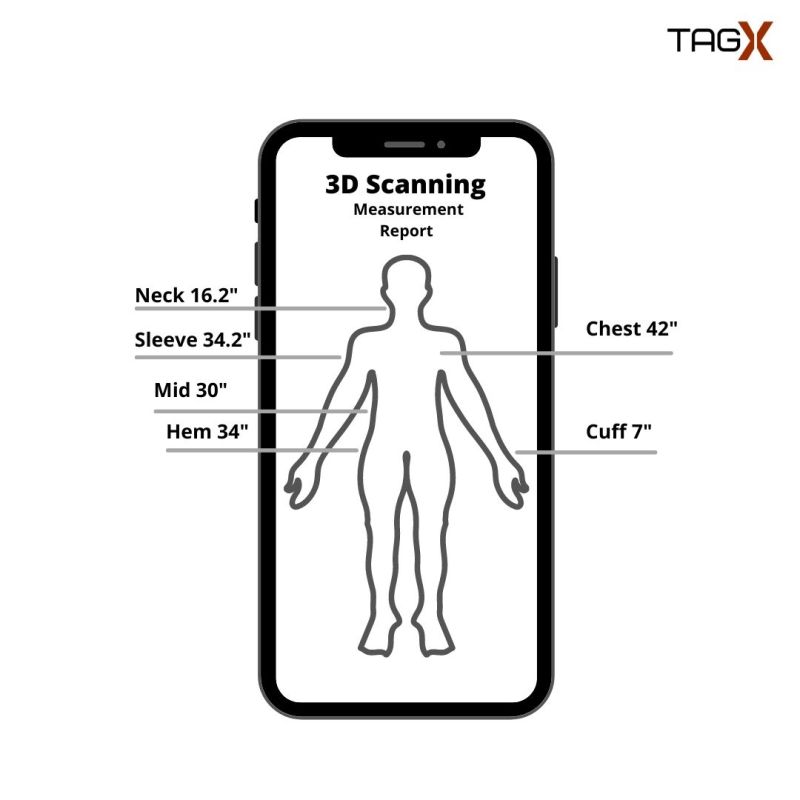

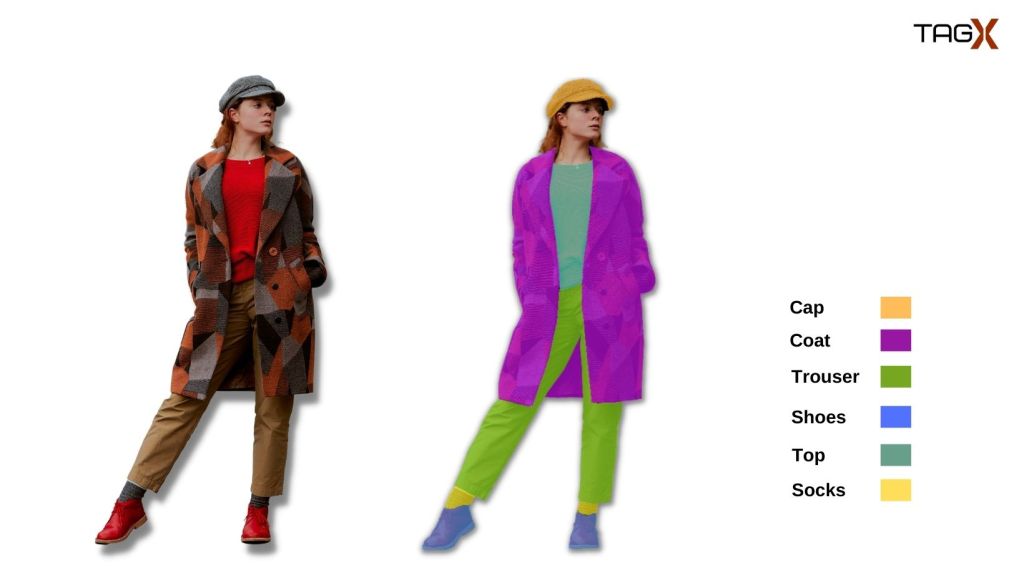

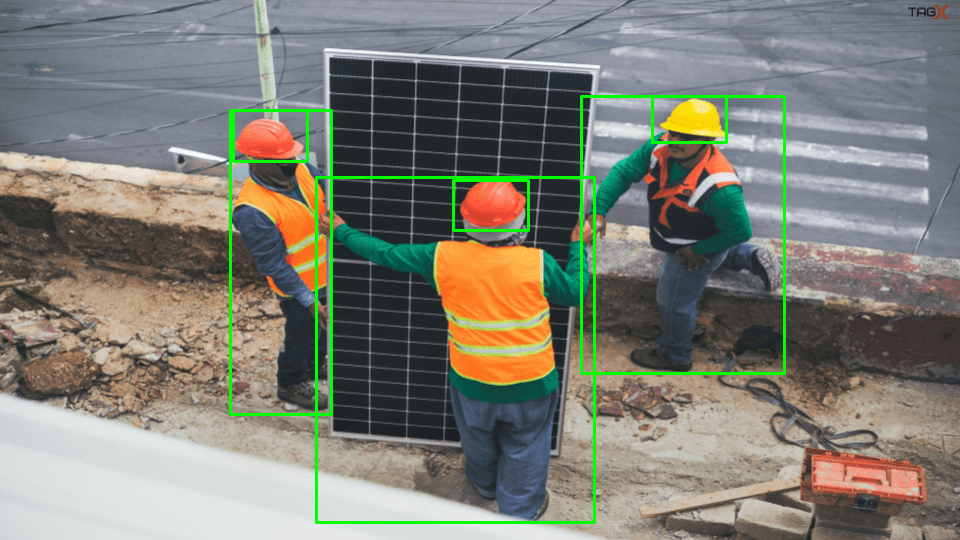

Satellite imagery, which is commonly used in remote sensing, provides an unprecedented way of capturing the Earth’s surface. The satellite’s images are then analyzed using a variety of computer vision techniques, supported by data annotation, in which every relevant portion of the image is labeled and its essential features extracted. This allows scientists to build predictive models for specific remote sensing applications, such as detecting natural disasters and limiting their impact.

No application of AI is entirely certain or risk-free, but the technology is showing clear potential for exploring space with innovative machines and projects. With each innovation, it comes closer to delivering new insights and proving its value to humanity.
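As an illustration of what an image annotation can look like in code, the sketch below defines a simple bounding-box label and computes intersection over union (IoU), a standard way to measure how well two boxes agree, for example an annotator's box and a model's prediction. The class name, field names, and pixel coordinates are assumptions; the article does not describe a specific annotation format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """A labeled rectangular region of an image, in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self):
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a, b):
    """Intersection over union of two boxes: 1.0 is identical, 0.0 is disjoint."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    intersection = inter_w * inter_h
    return intersection / (a.area() + b.area() - intersection)


# Hypothetical example: a human label and a model prediction for the same area.
human = BoxAnnotation("flooded_area", 0, 0, 10, 10)
model = BoxAnnotation("flooded_area", 5, 5, 15, 15)
print(iou(human, model))
```

Bounding boxes are only one annotation type; satellite imagery is also commonly labeled with polygons or per-pixel masks, which follow the same idea with more detailed geometry.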

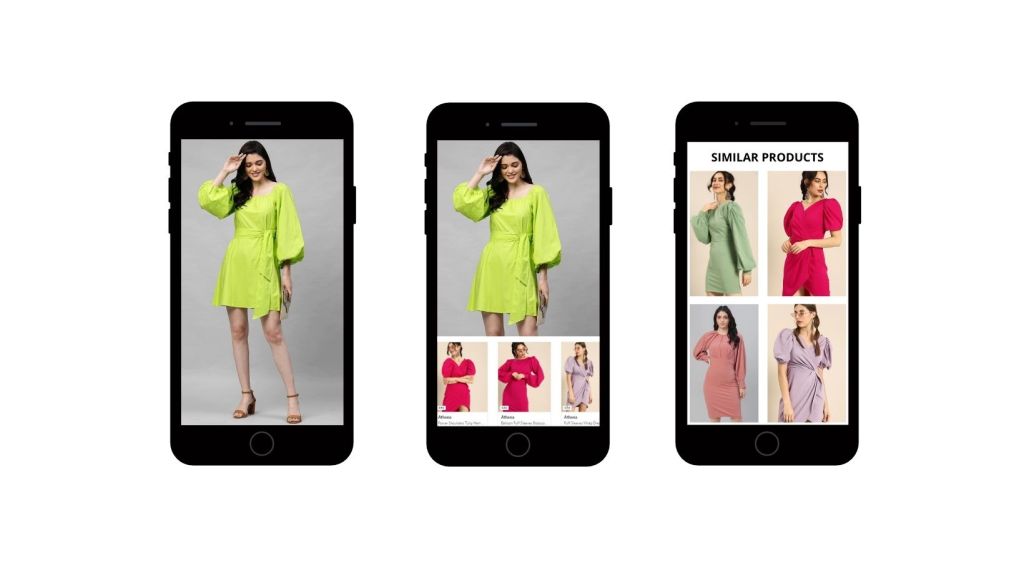

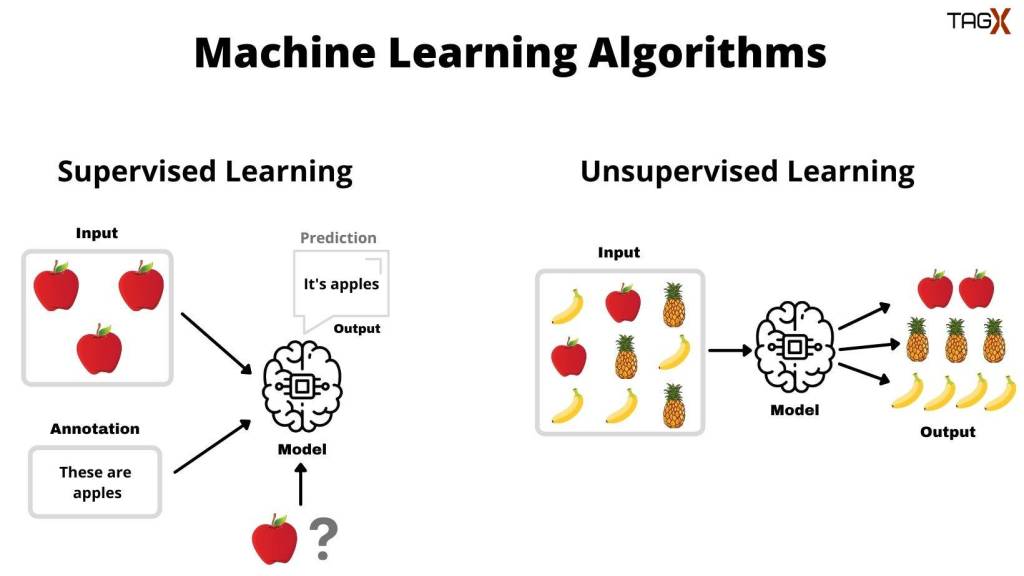

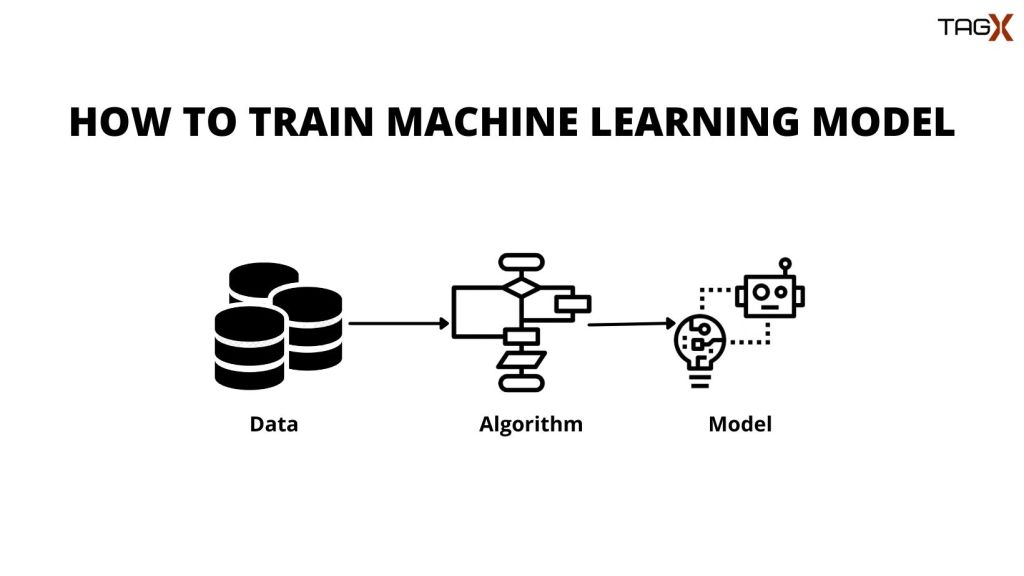

All AI technologies require training datasets, but machines cannot learn from raw data in its original form. This is why raw datasets need to be prepared with data annotation methods. Because this is a very time-consuming process, companies developing AI products often outsource the work to third-party service providers, such as TagX, that can annotate millions of images and videos.

TagX provides high-quality training data by combining a human-assisted approach with machine-learning assistance. Our text, image, audio, and video annotations give you the confidence to scale your AI and ML models. Whatever your data annotation requirements, our managed service team is ready to support you in deploying and maintaining your AI and ML projects.