Artificial intelligence (AI) is a broad field of computer science concerned with building computer systems that can perform tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. Its applications include robotics, natural language processing, expert systems, gaming, virtual reality, medical diagnosis, self-driving cars, and financial trading.

The Glossary

1. Artificial Intelligence (AI): Refers to the ability of computers or machines to imitate or simulate human intelligence and behavior.

2. Machine Learning (ML): A subset of Artificial Intelligence (AI) that enables machines to learn from experience and data without being explicitly programmed.

3. Neural Network: A type of machine learning algorithm that is based on a network of interconnected nodes.

4. Natural Language Processing (NLP): A subfield of Artificial Intelligence (AI) that is concerned with enabling computers to understand, interpret, and generate human language.

5. Deep Learning: A subset of Machine Learning (ML) that uses multi-layered artificial neural networks to learn from large amounts of data.

6. Cognitive Computing: A type of Artificial Intelligence (AI) that is based on the principles of cognitive science and aims to understand and replicate human thought processes.

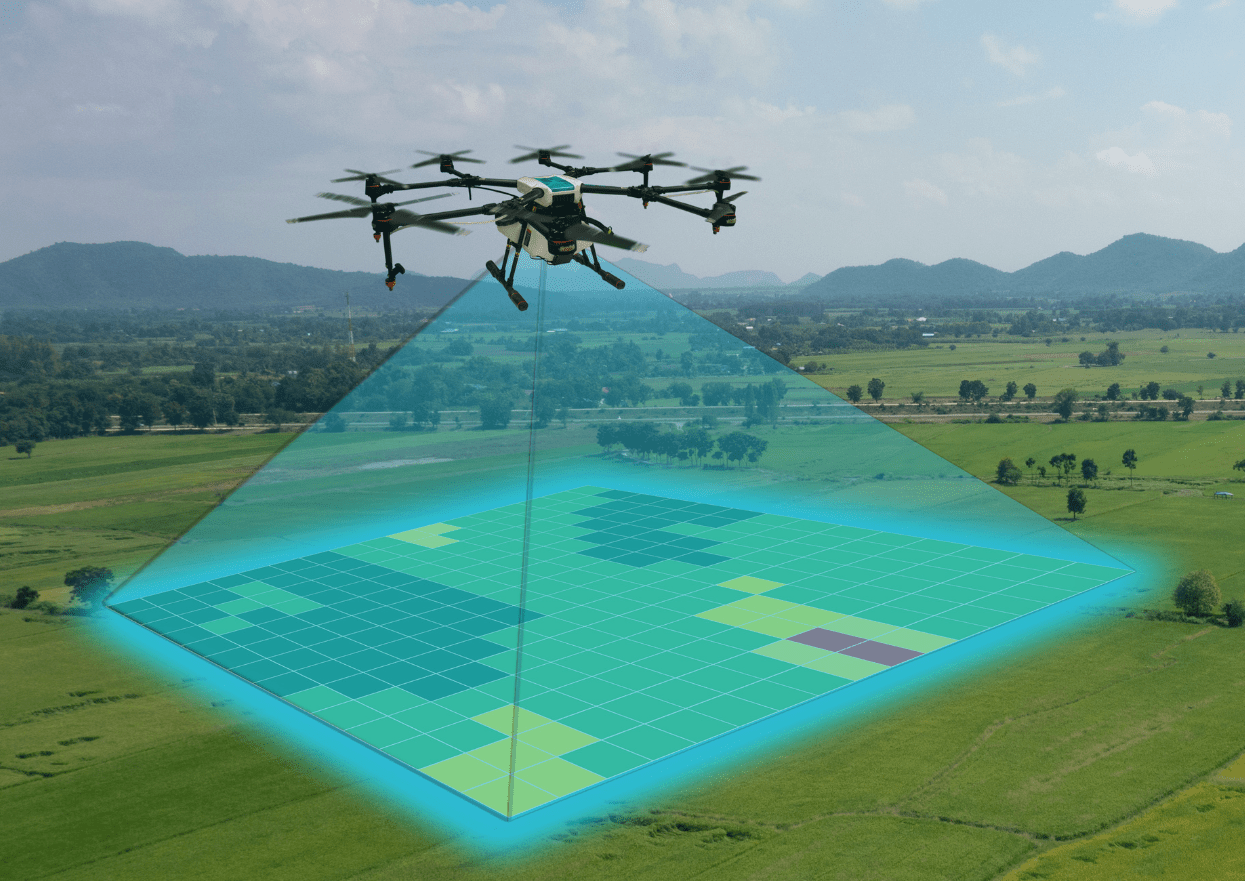
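
The neural network and deep learning entries above describe layers of interconnected nodes. As a rough illustration, the sketch below passes an input through two small layers using only the Python standard library. The weights, the helper names `dense` and `forward`, and the choice of a sigmoid activation are assumptions made for this example; a real network would learn its weights from data.

```python
import math
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights per node, bias per node)


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One fully connected layer: each node sums its weighted inputs, adds a bias, applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Feed the inputs through every layer in order and return the final activations."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Hypothetical hand-picked weights, for illustration only.
LAYERS: List[Layer] = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer: 2 inputs -> 2 nodes
    ([[1.0, -1.0]], [0.0]),                    # output layer: 2 nodes -> 1 node
]

output = forward([1.0, 0.0], LAYERS)
print(output)  # a single value between 0 and 1
```

Stacking more entries in `LAYERS` is what makes such a network "deep" in the sense of entry 5.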

7. Robotics: The science and technology of designing, constructing, and operating robots.

8. Autonomous Agents: Artificial Intelligence (AI) systems that are able to act independently of external instruction.

9. Algorithm: A set of instructions that a computer follows to solve a problem or perform a task.

10. Classification: A type of supervised machine learning task in which a model learns from labeled examples to assign each data point to one of a set of predefined classes.

11. Data Mining: The process of extracting meaningful patterns or knowledge from large datasets.

12. Feature Engineering: The process of creating, transforming, or selecting input features from existing data so that a machine learning model can learn from them more effectively.

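13. Hyperparameter: A setting that controls how a machine learning model is trained or structured, such as the learning rate or the number of layers. It is chosen before training rather than learned from the data.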

14. Loss Function: A measure of how far the model’s prediction is from the true value.

15. Neural Network: A kind of machine learning model composed of multiple layers of neurons.

16. Optimization: The process of finding the parameters that lead to the best performance of a machine learning model.

17. Training Set: A subset of the data used to train a machine learning model.

18. Feature Extraction: The process of deriving meaningful, compact information from raw data, such as an image. In computer vision, for example, this may mean extracting edges, corners, or other characteristics from an image.

19. Image Segmentation: The process of dividing an image into multiple regions or segments, usually by assigning every pixel to one of them. It is used in computer vision to isolate important objects or features in an image.
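
The feature extraction and image segmentation entries above can be illustrated on a tiny grayscale grid. The sketch below is a deliberately simple stand-in: thresholding is only one basic segmentation approach, and the pixel-difference function is a crude edge feature. The names `segment_by_threshold`, `horizontal_gradient`, and `TOY_IMAGE` are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels from 0 (black) to 255 (white)


def segment_by_threshold(image: Image, threshold: int) -> Image:
    """Label each pixel 1 (foreground) if it is brighter than the threshold, else 0 (background)."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def horizontal_gradient(image: Image) -> Image:
    """Absolute difference between horizontally adjacent pixels; large values suggest an edge."""
    return [[abs(row[i + 1] - row[i]) for i in range(len(row) - 1)] for row in image]


# Hypothetical 3x4 image: dark on the left, bright on the right.
TOY_IMAGE: Image = [
    [10, 12, 200, 210],
    [11, 13, 205, 220],
    [9, 10, 11, 215],
]

mask = segment_by_threshold(TOY_IMAGE, threshold=128)
edges = horizontal_gradient(TOY_IMAGE)

for row in mask:
    print(row)
# [0, 0, 1, 1]
# [0, 0, 1, 1]
# [0, 0, 0, 1]
```

The largest values in `edges` fall where the mask switches from 0 to 1, which is the boundary between the two segments.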

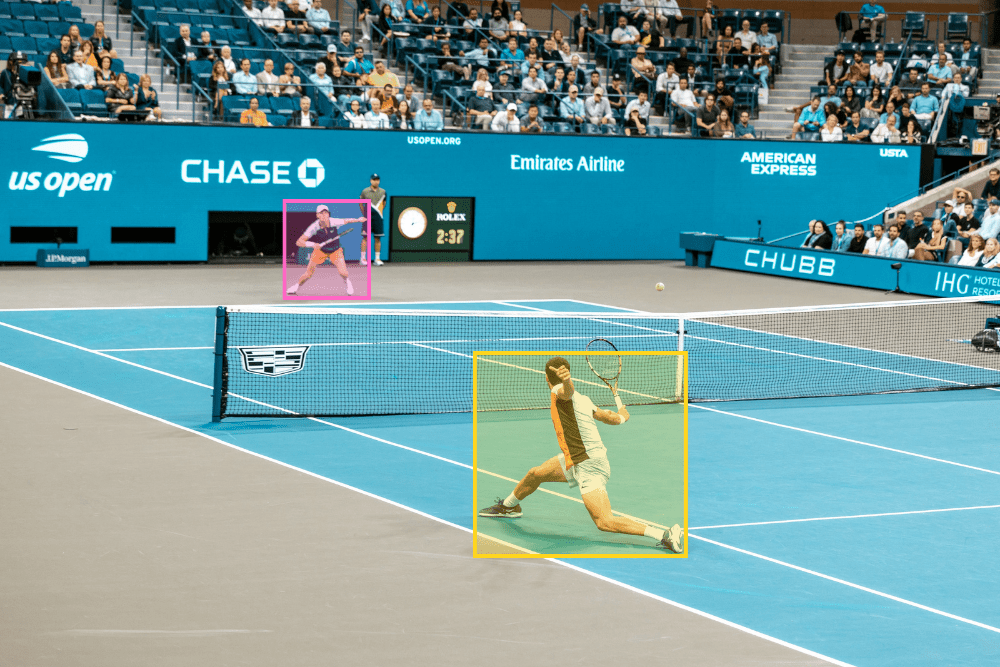
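
Several of the training terms defined above (hyperparameter, loss function, optimization, training set) fit together in a single loop. The sketch below fits a one-parameter model `y = w * x` with gradient descent on mean squared error. The data, the function names, and the learning rate are assumptions chosen so that the example converges; gradient descent is only one of many optimization methods.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (input x, true value y) pairs


def mse_loss(w: float, data: Dataset) -> float:
    """Loss function: mean squared distance between predictions w * x and true values y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_gradient(w: float, data: Dataset) -> float:
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def train(data: Dataset, learning_rate: float, steps: int) -> float:
    """Optimization: repeatedly nudge w in the direction that lowers the loss."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * mse_gradient(w, data)
    return w


# Hypothetical training set generated from y = 2x.
TRAINING_SET: Dataset = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

# learning_rate and steps are hyperparameters: chosen by hand, not learned.
w = train(TRAINING_SET, learning_rate=0.05, steps=200)
print(round(w, 4))  # 2.0
```

Here `w` is a learned parameter, while `learning_rate` and `steps` are hyperparameters. Setting the learning rate too high for this data would make the updates overshoot and diverge.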

20. Object Detection: The process of identifying and locating objects in an image, typically by drawing a bounding box around each object and assigning it a class label. It supports tasks such as face detection and object tracking.

21. Object Recognition: The process of identifying which object or objects appear in an image. The term is often used broadly to cover both image classification and object detection, and it underlies applications such as facial recognition.

22. Image Classification: The process of assigning an image to one or more predefined classes. Unlike object detection, it labels the image as a whole and does not locate individual objects within it.

23. Corpus: A corpus is a large collection of text data used to train and develop natural language processing algorithms.

24. Tokenization: Tokenization is the process of breaking a text down into smaller units called tokens, such as words, subwords, and punctuation marks.

25. Word Embedding: Word embedding is a technique for representing words as dense numerical vectors, usually with tens to hundreds of dimensions, such that words used in similar contexts have similar vectors.

26. Named Entity Recognition: Named entity recognition (NER) is the process of automatically identifying and classifying entities (such as names of people, locations, and organizations) in a given text.

27. Part-of-Speech Tagging: Part-of-speech tagging is the process of assigning a part of speech (such as noun, verb, or adjective) to each token in a given text.

28. Syntactic Parsing: Syntactic parsing is the process of analyzing a sentence to determine its syntactic structure, such as its constituent phrases and the relationships between them.

29. Semantic Analysis: Semantic analysis is the process of determining the meaning of a text by analyzing the words and sentences within it.

30. Autonomous Agents: A type of AI system that can act independently and make decisions without direct input from a human operator.

31. Expert System: A computer program that uses expert knowledge and reasoning to solve complex problems.

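32. Reinforcement Learning: A type of machine learning in which an agent learns by interacting with an environment and receiving rewards or penalties for its actions, so that desired behaviors are encouraged over time.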

33. Deep Learning: A type of machine learning in which neural networks with many layers learn useful representations directly from large amounts of data.

34. Chatbot: A computer program designed to simulate conversation with human users.

35. Dialogflow: A Google Cloud natural language understanding platform that enables the development of conversational user interfaces.

36. Intent Recognition: The process of recognizing user intent from spoken or written input.

37. Entity Extraction: The process of identifying and extracting specific pieces of information (entities), such as dates, names, or locations, from a user’s input.

38. Knowledge Representation: The process of representing knowledge in a structured form.

39. Chatbot Platform: A platform that provides the infrastructure needed to develop and deploy chatbot applications.
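
The chatbot, intent recognition, and entity extraction entries above can be tied together in a toy example. The sketch below uses hand-written keyword lists and regular expressions, which is an assumption made for brevity; production chatbots typically rely on trained models or a chatbot platform instead. The intents, keywords, and function names are all hypothetical.

```python
import re
from typing import Dict, List, Optional

# Hypothetical intents and the keywords that trigger them.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "book_flight": ["book", "flight", "fly"],
    "check_weather": ["weather", "forecast", "rain"],
}


def recognize_intent(text: str) -> Optional[str]:
    """Intent recognition: pick the intent whose keywords appear most often in the input."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    best_intent, best_score = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = sum(1 for keyword in keywords if keyword in words)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


def extract_entities(text: str) -> Dict[str, str]:
    """Entity extraction: pull out a capitalized destination after 'to' and an ISO-style date."""
    entities: Dict[str, str] = {}
    destination = re.search(r"\bto ([A-Z][a-z]+)", text)
    if destination:
        entities["destination"] = destination.group(1)
    date = re.search(r"\b(\d{4}-\d{2}-\d{2})\b", text)
    if date:
        entities["date"] = date.group(1)
    return entities


def reply(text: str) -> str:
    """Chatbot: combine the recognized intent and extracted entities into a response."""
    intent = recognize_intent(text)
    entities = extract_entities(text)
    if intent == "book_flight":
        destination = entities.get("destination", "an unspecified city")
        return f"Looking for flights to {destination}."
    if intent == "check_weather":
        return "Let me check the forecast."
    return "Sorry, I did not understand that."


print(reply("I want to book a flight to Paris on 2024-05-01"))
# Looking for flights to Paris.
```

Keyword matching breaks down quickly on paraphrases and typos, which is one reason real systems learn intents from annotated examples.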

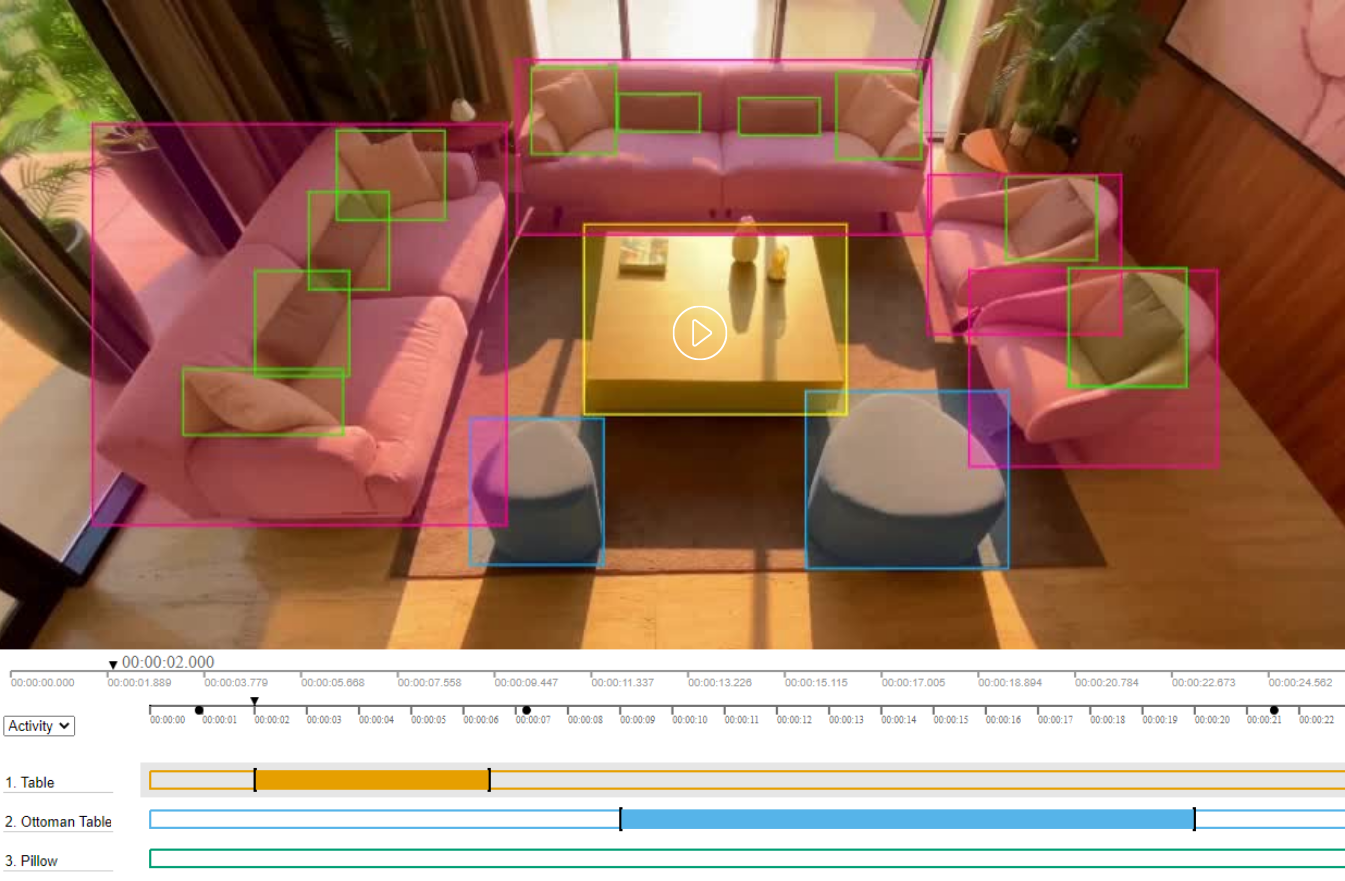
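
The tokenization and word embedding entries above lend themselves to a short demonstration. The sketch below splits text with a simple regular expression and compares toy word vectors using cosine similarity. The three-dimensional vectors in `TOY_EMBEDDINGS` are invented for illustration; real embeddings are learned from a corpus and have many more dimensions.

```python
import math
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Split text into word tokens and individual punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: values near 1.0 mean they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-written so that "cat" and "dog" are close together.
TOY_EMBEDDINGS: Dict[str, List[float]] = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

print(tokenize("Hello, world!"))  # ['Hello', ',', 'world', '!']

cat_dog = cosine_similarity(TOY_EMBEDDINGS["cat"], TOY_EMBEDDINGS["dog"])
cat_car = cosine_similarity(TOY_EMBEDDINGS["cat"], TOY_EMBEDDINGS["car"])
print(cat_dog > cat_car)  # True
```

Libraries for natural language processing usually ship more careful tokenizers that handle contractions, URLs, and subword units.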

40. Chatbot Framework: A framework that provides the necessary tools and components to build chatbot applications.

41. Data Annotation: Data annotation is the process of labeling and providing structured information about data in order to facilitate easier understanding and interpretation. This is done by providing metadata, which may include labels, descriptions, and other contextual information. Data annotation is essential for machine learning applications and is used to train, evaluate, and improve algorithms.

42. Data Labeling: Data labeling is the process of assigning labels to data points. Labels are typically used to provide additional information about the data. For instance, labels may be used to indicate the class or category of a data point, the person or object in an image, the sentiment of a text, or the intent of a voice command. Data labeling is a critical step in the development of machine learning models, as it helps the model learn to recognize patterns in the data.

43. Data Type: A data type is a classification that specifies which type of value is stored in a variable or a database field.

44. Validation: Validation is the process of ensuring that data is valid and meets certain criteria before it is accepted.

45. Categorization: The process of assigning labels or categories to data points based on the characteristics of the data.

46. Ground Truth: A set of data points that have already been labeled and can be used as a reference to evaluate the accuracy of a machine learning model.

47. Test Data: Data points held out from training and used to evaluate how well a trained machine learning model performs on examples it has not seen.

48. Quality Check: In data annotation, quality checking is an important step to ensure accuracy and consistency in the data. It begins with reviewing the data annotation guidelines to ensure that the annotators are following the same rules. Additionally, a sample of the annotated data can be reviewed to ensure that the data is accurately labeled and that any mistakes are corrected.
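
The ground truth and quality check entries above both come down to comparing sets of labels. The sketch below computes accuracy against ground truth and Cohen's kappa between two annotators. Cohen's kappa is one common agreement measure and is used here as an assumption; the glossary itself does not prescribe a specific metric. All labels and names are hypothetical.

```python
from collections import Counter
from typing import List


def accuracy(predictions: List[str], ground_truth: List[str]) -> float:
    """Fraction of predictions that match the ground truth labels."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    correct = sum(p == t for p, t in zip(predictions, ground_truth))
    return correct / len(ground_truth)


def cohen_kappa(labels_a: List[str], labels_b: List[str]) -> float:
    """Agreement between two annotators, corrected for the agreement expected by chance."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both annotators must label the same items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:  # both annotators used a single identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels for four images.
GROUND_TRUTH = ["cat", "dog", "cat", "dog"]
MODEL_PREDICTIONS = ["cat", "dog", "dog", "dog"]
ANNOTATOR_A = ["cat", "dog", "cat", "dog"]
ANNOTATOR_B = ["cat", "dog", "dog", "dog"]

print(accuracy(MODEL_PREDICTIONS, GROUND_TRUTH))  # 0.75
print(cohen_kappa(ANNOTATOR_A, ANNOTATOR_B))      # 0.5
```

A low kappa on a reviewed sample is a signal to revisit the annotation guidelines before labeling more data.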